Introduction: When Science Becomes a Marketplace

Science has always carried a reputation for rigor, integrity, and the slow, painstaking pursuit of truth. Researchers spend years—sometimes decades—trying to solve complex problems, test hypotheses, and refine results before sharing their findings with the world. In theory, published work represents a collective achievement built on honesty and peer review.

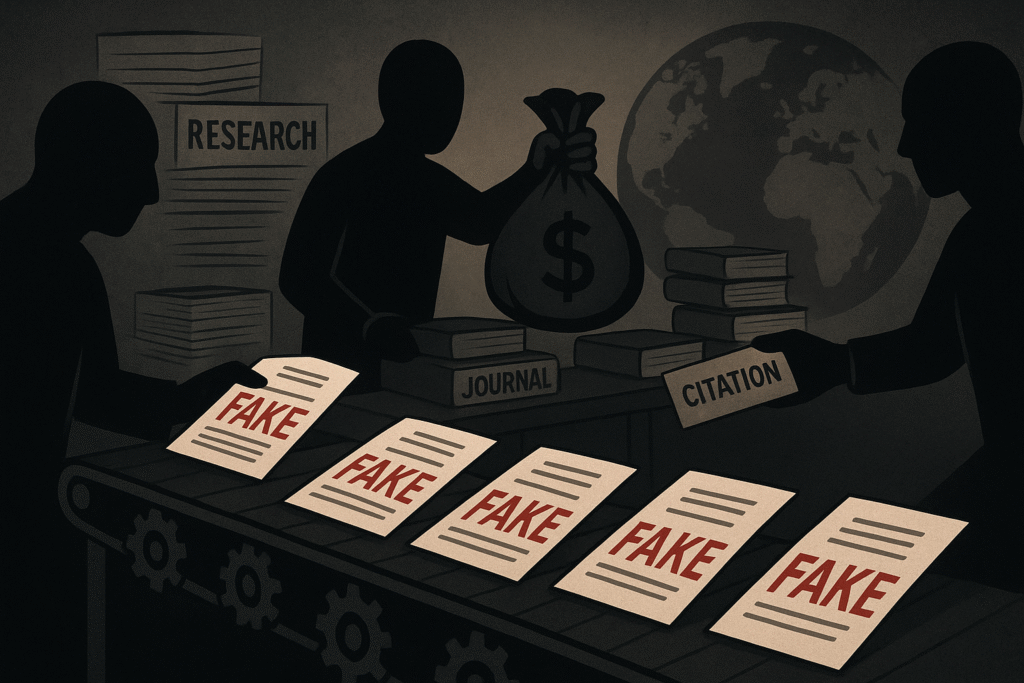

But in practice, something darker has taken root in academia: a sprawling black market for fake research. What was once whispered about in university hallways is now a global, billion-dollar underground industry. Paper mills churn out fabricated studies, predatory journals accept anything for a fee, and citation cartels manipulate rankings. The “publish or perish” culture of academia has created fertile ground for all three.

The damage is not abstract. Fake science doesn’t just distort academic careers—it influences public health policies, environmental laws, technology investments, and even the food on our plates. It erodes public trust in science at a time when misinformation already spreads faster than truth.

This long-form investigation examines how this hidden trade works, why mathematics has become a surprising flashpoint, and how flawed incentives allow fraud to flourish. More importantly, it asks: can the global academic system ever break free from its addiction to numbers and rankings and return to valuing knowledge itself?

The Rise of Metrics: How Science Became Obsessed With Numbers

Before understanding the black market, one must understand the rules of the game. In modern academia, metrics rule everything. A researcher’s worth is often reduced to how many papers they publish, how often their work is cited, and whether they appear in “prestigious” journals.

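- The Impact Factor: Introduced in the 1970s, this score measures how often a journal's recent papers are cited: the citations received in a given year by items the journal published in the two preceding years, divided by the number of citable items it published in those two years. Originally meant to guide librarians on which journals to buy, it became a proxy for prestige.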

- Citation Counts: The number of times a researcher’s work is referenced. High counts suggest influence—but they can be artificially inflated.

- University Rankings: Global rankings such as QS and Times Higher Education rely heavily on publication and citation data, making them vulnerable to manipulation.

- Researcher Labels: Clarivate’s Highly Cited Researchers (HCR) list identifies researchers who have authored multiple papers ranking in the top 1% by citations for their field and publication year. Inclusion brings prestige, funding, and job offers.
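To make the Impact Factor less abstract, here is a minimal sketch of the two-year calculation described above. The `Citation` record, the `impact_factor` helper, and the journal names and counts in the usage lines are all hypothetical; Clarivate's real procedure also involves editorial judgments about what counts as a "citable item," which this sketch ignores. The optional `exclude_self` flag drops citations a journal makes to itself, which shows how pressuring authors to cite a journal's own archive can lift its score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    citing_journal: str  # journal in which the citing article appeared
    cited_journal: str   # journal of the article being cited
    citing_year: int     # year the citation was made
    cited_year: int      # publication year of the cited article


def impact_factor(journal, year, citable_items, citations, exclude_self=False):
    """Two-year impact factor of `journal` for `year` (illustrative sketch).

    citable_items: dict mapping publication year -> number of citable items
    citations: iterable of Citation records
    exclude_self: if True, ignore citations from the journal to itself
    """
    window = (year - 1, year - 2)
    numerator = sum(
        1
        for c in citations
        if c.cited_journal == journal
        and c.citing_year == year
        and c.cited_year in window
        and not (exclude_self and c.citing_journal == journal)
    )
    denominator = sum(citable_items.get(y, 0) for y in window)
    return numerator / denominator if denominator else 0.0


# Hypothetical journal: 100 citable items over 2022-2023, cited 250 times in 2024,
# of which 100 citations come from the journal itself.
items = {2022: 40, 2023: 60}
cites = (
    [Citation("Other J.", "J. Example", 2024, 2023)] * 150
    + [Citation("J. Example", "J. Example", 2024, 2022)] * 100
)
print(impact_factor("J. Example", 2024, items, cites))                     # 2.5
print(impact_factor("J. Example", 2024, items, cites, exclude_self=True))  # 1.5
```

In this made-up case, self-citations account for the entire gap between 1.5 and 2.5, a 67% boost from citations that say nothing about outside influence.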

The problem is that these numbers were never designed to be the sole measure of quality. Numbers compress complexity. A groundbreaking theorem and a meaningless, copy-pasted paper can count equally as “one publication.” When careers, funding, and global prestige are tied to these crude metrics, the temptation to game the system becomes overwhelming.

Case Study: The Mathematics Scandal That Exposed the Problem

Mathematics is often considered immune to fraud. After all, numbers don’t lie, right? But the discipline has unique vulnerabilities. Compared with papers in medicine or biology, math papers tend to be shorter, have fewer authors, and attract fewer citations. That means small manipulations can have outsized effects.

In 2019, the cracks became impossible to ignore. The institution with the highest number of “Highly Cited Researchers” in mathematics was China Medical University in Taiwan (CMU). The catch? CMU doesn’t even have a mathematics department.

How was this possible? Through affiliation games and citation manipulation. Mathematicians—many with loose or honorary ties to CMU—were listed as affiliated, inflating the institution’s ranking. Edward Dunne’s audit of the 2019 HCR list revealed glaring anomalies:

- The median self-citation rate among these HCR mathematicians was more than double that of Fields Medalists and Abel Prize winners.

- Many citations came from low-quality mega-journals that accept thousands of papers per year.

- Networks of mathematicians were citing each other in closed loops—classic citation cartels.
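A self-citation rate is simple to compute once citation records are available. The sketch below uses one straightforward definition, assumed here for illustration and not necessarily the exact method used in Dunne's audit: the share of citations to an author's papers that come from papers the same author co-wrote. The function names and the authors "A", "B", "C", "X", "Y", "Z" are hypothetical.

```python
from statistics import median


def self_citation_rate(author, citations):
    """Share of citations received by `author` that come from papers
    on which `author` is also listed (illustrative definition).

    citations: list of (citing_authors, cited_authors) pairs of sets
    """
    received = [citing for citing, cited in citations if author in cited]
    if not received:
        return 0.0
    self_cites = sum(1 for citing in received if author in citing)
    return self_cites / len(received)


def median_self_citation_rate(authors, citations):
    """Median self-citation rate across a group, e.g. a list of HCR names."""
    return median([self_citation_rate(a, citations) for a in authors])


# Hypothetical citation records: each author receives four citations.
cites = [
    ({"A"}, {"A"}), ({"A"}, {"A"}), ({"A", "X"}, {"A"}), ({"Y"}, {"A"}),  # A: 3 of 4 self
    ({"B"}, {"B"}), ({"Z"}, {"B"}), ({"B", "A"}, {"B"}), ({"Y"}, {"B"}),  # B: 2 of 4 self
    ({"X"}, {"C"}), ({"Y"}, {"C"}), ({"Z"}, {"C"}), ({"X"}, {"C"}),       # C: 0 of 4 self
]
print(median_self_citation_rate(["A", "B", "C"], cites))  # 0.5
```

Using the median rather than the mean keeps one extreme outlier from dominating a group comparison, which is why the median is the natural statistic when comparing a list like the HCR mathematicians against prize winners.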

This episode shocked the mathematics community and demonstrated how fragile bibliometric systems really are. It wasn’t an isolated case—it was a symptom of a much larger disease.

The Global Black Market of Fake Science

Behind the scandals lies a growing commercial ecosystem designed to sell shortcuts. It thrives in countries where academic promotion depends heavily on publication counts, but it is truly global.

1. Paper Mills: Factories of Fake Research

Paper mills are businesses that mass-produce fake studies for paying clients. For a fee, they will:

- Generate fabricated data sets.

- Write entire manuscripts.

- Guarantee placement in indexed journals.

- Add paying customers as “co-authors,” even if they never saw the paper.

A single paper mill can push thousands of fake studies into circulation annually. The content ranges from plagiarized reviews to entirely fraudulent experimental results.

2. Predatory Journals: Pay-to-Publish Outlets

Predatory journals exploit the author-pays model of open-access publishing. Like many legitimate open-access journals, they charge authors “article processing fees” instead of charging readers. Unlike legitimate journals, they often skip peer review altogether, accepting anything that arrives with payment.

Warning signs include:

- Fake editorial boards (sometimes with scholars listed without consent).

- Flattering spam emails inviting submissions.

- Unrealistic acceptance times (sometimes under a week).

The tragedy is that many predatory journals still appear in major databases like Scopus and Web of Science, making them seem legitimate.

3. Citation Cartels: Trading Influence

Citation cartels are groups of researchers who systematically cite each other’s work to inflate their metrics. Journals sometimes pressure authors to cite articles from their own archives to boost their impact factors.

This practice distorts the natural flow of scientific influence. Instead of reflecting genuine engagement, citation counts become a currency traded in closed networks.
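One crude way to surface possible cartels is to look for pairs of authors who cite each other heavily in both directions. The sketch below assumes author-level citation pairs are already available; the `reciprocal_pairs` name, the threshold of 5, and the authors "P", "Q", "R" are hypothetical choices for illustration. Reciprocity alone proves nothing, since close collaborators legitimately cite each other, so a flag like this is a prompt for human reading, not a verdict.

```python
from collections import Counter


def reciprocal_pairs(citations, min_each_way=5):
    """Flag author pairs that cite each other at least `min_each_way`
    times in BOTH directions (illustrative screening heuristic).

    citations: iterable of (citing_author, cited_author) pairs
    Returns a sorted list of (author_a, author_b, a_to_b, b_to_a).
    """
    # Count directed citations between distinct authors; self-citations are dropped.
    counts = Counter((a, b) for a, b in citations if a != b)
    flagged = []
    for (a, b), forward in counts.items():
        backward = counts.get((b, a), 0)
        # a < b ensures each unordered pair is reported only once.
        if a < b and forward >= min_each_way and backward >= min_each_way:
            flagged.append((a, b, forward, backward))
    return sorted(flagged)


# Hypothetical data: P and Q trade citations; R cites P heavily but not vice versa.
cites = [("P", "Q")] * 6 + [("Q", "P")] * 7 + [("R", "P")] * 9 + [("P", "R")] * 1
print(reciprocal_pairs(cites))  # [('P', 'Q', 6, 7)]
```

This only catches two-way exchanges. Loops of three or more researchers (A cites B, B cites C, C cites A) need a graph-based search, such as looking for tightly connected clusters in the citation network.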

Why Fraud in Science Matters Beyond Academia

It would be easy to dismiss fraudulent publishing as an internal academic squabble—after all, why should the average citizen care if a few mathematicians or biologists inflate their citation counts? But the ripple effects are massive.

- Public Policy Distortion: Governments often rely on published research to guide policy. Fake studies on climate change, public health, or education can directly influence decisions worth billions.

- Medical Risk: In fields like medicine, fraudulent studies can harm patients. Fake data on drugs or treatments can lead to dangerous clinical practices or wasted investment in useless therapies.

- Economic Waste: Funding agencies spend billions annually on research. If grants are awarded based on manipulated metrics, genuine innovation loses out to flashy but meaningless work.

- Erosion of Trust: At a time when public trust in science is already under assault from conspiracy theories and misinformation, fraudulent publishing adds fuel to the fire. Each scandal becomes ammunition for anti-science movements.

Red Flags: How to Spot Fake or Low-Quality Research

For readers, students, and even professionals, telling real science from fake can be daunting. But there are practical checks:

- Check indexing: Reputable mathematics journals are indexed in curated databases like MathSciNet or zbMATH Open. If a journal is absent from the curated index for its field, be cautious.

- Look for unusual publishing patterns: Hundreds of papers appearing in one “special issue” within weeks is suspicious.

- Editorial boards: Are the listed editors actually experts in the subject? Do they even know they’re on the board?

- Citation stuffing: Papers overloaded with references to the authors’ own work suggest manipulation.

- Generic solicitations: Real editors rarely email strangers inviting them to submit or guest-edit.
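The journal-level checks above can be collected into a simple checklist. This is a minimal sketch, assuming a reader fills in a hypothetical `JournalProfile` by hand; the field names, the 100-paper and 8-week special-issue thresholds, and the sample journal are illustrative assumptions, while the one-week acceptance threshold follows the warning sign mentioned earlier for predatory journals. A flag is a reason to look closer, not proof of fraud.

```python
from dataclasses import dataclass


@dataclass
class JournalProfile:
    # Hypothetical, hand-collected inputs; none come from a real API.
    name: str
    in_curated_index: bool        # e.g. found in MathSciNet or zbMATH Open
    largest_special_issue: int    # number of papers in the biggest special issue
    special_issue_weeks: float    # weeks over which that issue was published
    median_days_to_accept: float  # typical submission-to-acceptance time
    editors_verified: bool        # editors confirmed as real, relevant experts
    sends_cold_invitations: bool  # unsolicited "submit to us" emails


def red_flags(j, max_issue_size=100, max_issue_weeks=8, min_review_days=7):
    """Return a list of human-readable warning signs for journal `j`."""
    flags = []
    if not j.in_curated_index:
        flags.append("not in a curated index for its field")
    if j.largest_special_issue >= max_issue_size and j.special_issue_weeks <= max_issue_weeks:
        flags.append("very large special issue published within weeks")
    if j.median_days_to_accept < min_review_days:
        flags.append(f"median acceptance under {min_review_days} days")
    if not j.editors_verified:
        flags.append("editorial board not verified")
    if j.sends_cold_invitations:
        flags.append("unsolicited submission invitations")
    return flags


suspect = JournalProfile("Hypothetical J. of Everything", False, 300, 4, 5, False, True)
for flag in red_flags(suspect):
    print("-", flag)
```

Citation stuffing is a property of individual papers rather than journals, so it would need a separate check, for instance the share of a paper's references that point to its own authors.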

Rethinking Academic Incentives: Recommendations from Experts

The International Mathematical Union (IMU) and the International Council for Industrial and Applied Mathematics (ICIAM) have issued a set of practical recommendations for reform:

- Read, don’t just count: Evaluators should actually read papers, not just tally publications.

- De-emphasize rankings: Stop outsourcing judgment to commercial ranking systems.

- Discourage volume for volume’s sake: Reward quality and originality, not sheer output.

- Transparency in publishing: Push for stricter database curation to weed out predatory journals.

- Collective action: Universities, funding bodies, and scholars must act together to reset incentives.

The key message: metrics are tools, not truths. Used responsibly, they can help guide research. Used blindly, they incentivize fraud.

Where Do We Go From Here?

The black market in science is unlikely to disappear overnight. The financial incentives are too strong, and the demand for easy publications is too high. But the tide may be turning.

Mathematics, ironically, may lead the way out. Its culture of careful review and its established community databases make reform feasible. If universities and funders around the world begin rewarding genuine insight over numerical performance, the market for fake research will shrink.

Ultimately, the path forward is not to abandon measurement but to put it in perspective. Numbers can guide us, but they should never replace the careful human judgment that science requires. If we remember that truth, not citations, is the goal of research, then perhaps the scientific community can reclaim its integrity.

Conclusion: Science at a Crossroads

The black market in science is not a side issue—it is a global challenge that touches every aspect of knowledge production. From classrooms to clinics, from labs to legislatures, fake research corrodes the foundation of trust that societies place in science.

Yet, the solution lies not in despair but in reform. Read first, count second. Reward what matters, not what is easy to measure. If institutions worldwide commit to these principles, the market for fraudulent publishing will shrink, and science can once again live up to its highest ideals: the relentless, honest pursuit of truth.